Sun Shader

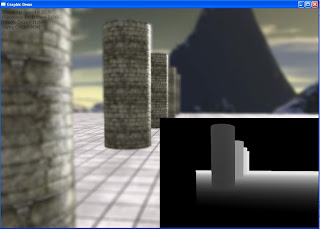

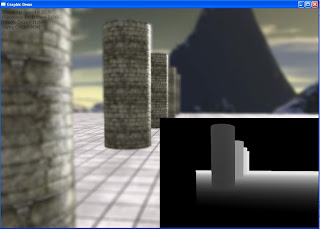

I also finished implementing depth of field (DOF). Here is a picture:

The lower-right rectangle shows the depth test for the scene. I'll write about these two effects in detail in my next blog post. Take care for now.

My blood, sweat and tears :D.

```cpp
void RenderIntoCubeMaps()
{
    // Save the camera position
    float xSave = gCamera->position().x;
    float ySave = gCamera->position().y;
    float zSave = gCamera->position().z;

    // Move the camera to the reflection/refraction object's position.
    // Here I put it at the origin (0, 0, 0).
    gCamera->position().x = 0.0f;
    gCamera->position().y = 0.0f;
    gCamera->position().z = 0.0f;

    // Prepare the projection matrix: 90-degree FOV and a 1:1 aspect ratio,
    // so the six faces together cover every direction around the object.
    D3DXMATRIX mProj;
    D3DXMatrixPerspectiveFovLH(&mProj, D3DX_PI * 0.5f, 1.0f, 1.0f, 10000.0f);

    // Store the current back buffer and z-buffer
    LPDIRECT3DSURFACE9 pBackBuffer = NULL;
    LPDIRECT3DSURFACE9 pZBuffer = NULL;
    gd3dDevice->GetRenderTarget(0, &pBackBuffer);
    if (SUCCEEDED(gd3dDevice->GetDepthStencilSurface(&pZBuffer)))
        gd3dDevice->SetDepthStencilSurface(g_pDepthCube);

    for (DWORD nFace = 0; nFace < 6; nFace++)
    {
        // Standard view that will be overridden below
        D3DXVECTOR3 vEnvEyePt = D3DXVECTOR3(0.0f, 0.0f, 0.0f);
        D3DXVECTOR3 vLookatPt, vUpVec;

        switch (nFace)
        {
        case D3DCUBEMAP_FACE_POSITIVE_X:
            vLookatPt = D3DXVECTOR3( 1.0f, 0.0f, 0.0f);
            vUpVec    = D3DXVECTOR3( 0.0f, 1.0f, 0.0f);
            break;
        case D3DCUBEMAP_FACE_NEGATIVE_X:
            vLookatPt = D3DXVECTOR3(-1.0f, 0.0f, 0.0f);
            vUpVec    = D3DXVECTOR3( 0.0f, 1.0f, 0.0f);
            break;
        case D3DCUBEMAP_FACE_POSITIVE_Y:
            vLookatPt = D3DXVECTOR3( 0.0f, 1.0f, 0.0f);
            vUpVec    = D3DXVECTOR3( 0.0f, 0.0f,-1.0f);
            break;
        case D3DCUBEMAP_FACE_NEGATIVE_Y:
            vLookatPt = D3DXVECTOR3( 0.0f,-1.0f, 0.0f);
            vUpVec    = D3DXVECTOR3( 0.0f, 0.0f, 1.0f);
            break;
        case D3DCUBEMAP_FACE_POSITIVE_Z:
            vLookatPt = D3DXVECTOR3( 0.0f, 0.0f, 1.0f);
            vUpVec    = D3DXVECTOR3( 0.0f, 1.0f, 0.0f);
            break;
        case D3DCUBEMAP_FACE_NEGATIVE_Z:
            vLookatPt = D3DXVECTOR3( 0.0f, 0.0f,-1.0f);
            vUpVec    = D3DXVECTOR3( 0.0f, 1.0f, 0.0f);
            break;
        }

        D3DXMATRIX mView;
        D3DXMatrixLookAtLH(&mView, &vEnvEyePt, &vLookatPt, &vUpVec);

        // Make this face of the cube texture the render target
        LPDIRECT3DSURFACE9 pSurf = NULL;
        g_apCubeMap->GetCubeMapSurface((D3DCUBEMAP_FACES)nFace, 0, &pSurf);
        gd3dDevice->SetRenderTarget(0, pSurf);
        ReleaseCOM(pSurf); // Safely release the surface.

        // Clear the z-buffer
        gd3dDevice->Clear(0L, NULL, D3DCLEAR_ZBUFFER, 0x000000ff, 1.0f, 0L);

        gd3dDevice->BeginScene();
        // render code here... (draw the scene with mView and mProj)
        gd3dDevice->EndScene();
    }

    // We're done with all six faces, so restore the camera position
    gCamera->position().x = xSave;
    gCamera->position().y = ySave;
    gCamera->position().z = zSave;

    // Restore the depth-stencil buffer and render target
    if (pZBuffer)
    {
        gd3dDevice->SetDepthStencilSurface(pZBuffer);
        ReleaseCOM(pZBuffer);
    }
    gd3dDevice->SetRenderTarget(0, pBackBuffer);
    ReleaseCOM(pBackBuffer);
}
```
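Each pass of the loop renders the scene with a 90-degree field of view and a 1:1 aspect ratio, so the six frusta together cover every direction around the object. When the shader later samples the cube with `texCUBE`, the face is picked by the lookup direction's largest-magnitude component. Below is a minimal, portable C++ sketch of that selection rule, assuming the same face order as `D3DCUBEMAP_FACES`; `CubeFace` and `SelectCubeFace` are hypothetical names for illustration, not part of Direct3D.

```cpp
#include <cmath>

// Face indices in the same order as D3DCUBEMAP_FACES:
// +X = 0, -X = 1, +Y = 2, -Y = 3, +Z = 4, -Z = 5.
enum CubeFace { PosX = 0, NegX = 1, PosY = 2, NegY = 3, PosZ = 4, NegZ = 5 };

// Pick the cube face a lookup direction lands on: the axis with the largest
// absolute component wins, and that component's sign picks + or -.
// Ties are broken in favor of X, then Y (an arbitrary choice in this sketch;
// real hardware tie-breaking is implementation-defined).
CubeFace SelectCubeFace(float x, float y, float z)
{
    float ax = std::fabs(x);
    float ay = std::fabs(y);
    float az = std::fabs(z);

    if (ax >= ay && ax >= az) return x >= 0.0f ? PosX : NegX;
    if (ay >= az)             return y >= 0.0f ? PosY : NegY;
    return z >= 0.0f ? PosZ : NegZ;
}
```

For example, a reflection vector of (-0.2, -0.9, 0.3) falls on the -Y face, which is the face rendered with `vLookatPt = (0, -1, 0)` in the loop. The hardware also converts the remaining two components into 2D coordinates within that face; this sketch only covers the face choice.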

Video:

So in this demo I implement these graphics techniques:


So voilà, a glass teapot! It has both reflection and refraction properties, just like the water. The violet tint comes from my attempt to add rainbow colors, the way white light splits into a spectrum when it is refracted. Here is the process:

```hlsl
// toEyeW:  unit vector from the surface point toward the eye (world space)
// normalW: unit surface normal (world space)

// Look up the reflection
float3 reflVec = reflect(-toEyeW, normalW);
float4 reflection = texCUBE(EnvMapS, reflVec.xyz);

// We'll use Snell's law for the refraction: sin(t2) = (n1 / n2) * sin(t1)
float cosine  = dot(toEyeW, normalW);
float sine    = sqrt(1 - cosine * cosine);
float sine2   = saturate(gIndexOfRefractionRatio * sine);
float cosine2 = sqrt(1 - sine2 * sine2);

// Basis for the refracted ray: x points into the surface,
// y is the tangential direction of the incoming view ray
float3 x = -normalW;
float3 y = normalize(cross(cross(toEyeW, normalW), normalW));

// Refraction
float3 refrVec = x * cosine2 + y * sine2;
float4 refraction = texCUBE(EnvMapS, refrVec.xyz);

// Rainbow tint, looked up by the viewing angle
float4 rainbow = tex1D(RainbowS, pow(cosine, gRainbowSpread));

float4 rain = gRainbowScale * rainbow * gBaseColor;
float4 refl = gReflectionScale * reflection;
float4 refr = gRefractionScale * refraction * gBaseColor;

return sine * refl + (1 - sine2) * refr + sine2 * rain + gLight.ambient / 10.0f;
```
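To sanity-check the basis construction in the shader, here is a small C++ port of it. `Vec3`, `RefractViaBasis` and `RefractClosedForm` are hypothetical helpers written for this sketch, not D3DX functions. The port is compared against the textbook closed-form refraction formula, the one the GLSL specification gives for its `refract` built-in (HLSL also has a `refract` intrinsic). The two should agree whenever there is no total internal reflection; past the critical angle, the shader's `saturate()` clamps `sine2` to 1 and produces a grazing ray, while the closed form returns a zero vector.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
double Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 Cross(Vec3 a, Vec3 b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 Normalize(Vec3 v) { return (1.0 / std::sqrt(Dot(v, v))) * v; }

// Port of the shader's construction. toEye and n are unit vectors;
// eta is the index-of-refraction ratio n1 / n2.
Vec3 RefractViaBasis(Vec3 toEye, Vec3 n, double eta)
{
    double cosine  = Dot(toEye, n);
    double sine    = std::sqrt(std::fmax(0.0, 1.0 - cosine * cosine));
    double sine2   = std::fmin(1.0, std::fmax(0.0, eta * sine)); // saturate()
    double cosine2 = std::sqrt(1.0 - sine2 * sine2);

    Vec3 x = -1.0 * n;                     // into the surface
    Vec3 t = Cross(Cross(toEye, n), n);    // tangential part of the view ray
    // At exactly normal incidence t is zero and the shader would normalize a
    // zero vector; this port returns the straight-through ray instead.
    if (Dot(t, t) < 1e-12) return x;
    Vec3 y = Normalize(t);
    return cosine2 * x + sine2 * y;
}

// Closed-form refraction of incident direction i (pointing toward the
// surface) about unit normal n. Returns zero on total internal reflection.
Vec3 RefractClosedForm(Vec3 i, Vec3 n, double eta)
{
    double d = Dot(n, i);
    double k = 1.0 - eta * eta * (1.0 - d * d);
    if (k < 0.0) return {0.0, 0.0, 0.0};
    return eta * i + (-(eta * d + std::sqrt(k))) * n;
}
```

For a ray entering glass (eta = 1 / 1.5) at 45 degrees to the normal (0, 1, 0), both functions give roughly (-0.471, -0.882, 0): a unit vector whose tangential component, sqrt(2)/3, is exactly eta times sin(45°), as Snell's law requires.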

As you can see from the picture above, the view ray from the eye to the pixel on the polygonal surface represents what we would see with normal mapping. With the actual geometry, however, we would see the pixel corresponding to t-off instead. So how do we fix that?

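One classic answer, sketched here in C++ and not necessarily the method used in this demo, is single-step parallax mapping: sample the height map at the original texture coordinate, then shift the coordinate along the tangent-space view direction by an amount proportional to that height. By similar triangles this approximates the offset to t-off. `Vec2`, `ParallaxOffset`, `scale` and `bias` are hypothetical names for this sketch, and the sign of the offset depends on your tangent-space handedness and on whether the map stores height or depth.

```cpp
#include <cmath>

struct Vec2 { float u, v; };

// Single-step parallax offset (sketch).
// (viewX, viewY, viewZ): unit vector from the surface point toward the eye,
//   in tangent space (x = tangent, y = bitangent, z = normal).
// height: height-map sample in [0, 1] at the original texture coordinate.
// scale, bias: hypothetical tuning values that map height into texture units.
// The shifted coordinate would be texCoord + ParallaxOffset(...).
Vec2 ParallaxOffset(float viewX, float viewY, float viewZ,
                    float height, float scale, float bias)
{
    float h = height * scale + bias;
    // Similar triangles: moving h along the normal corresponds to moving
    // h * (view.xy / view.z) across the surface.
    return { h * viewX / viewZ, h * viewY / viewZ };
}
```

Dividing by `viewZ` makes the offset blow up at grazing angles; the "offset limiting" variant described by Welsh simply drops that division, trading some accuracy for stability.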